Preliminaries

Artificial Intelligence (AI) and Machine Learning (ML) applications are significantly transforming the automotive industry. The global automotive artificial intelligence market is expected to reach $8,887.6 million by 2025, up from $445.8 million in 2017, growing at a CAGR of 45.0% from 2018 to 2025. The overall machine learning market is expected to reach USD 117.19 billion by 2027, with the increasing popularity of self-driving cars propelling demand from the automotive industry.
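As a quick sanity check on these figures, the compound annual growth rate can be recomputed from the two market sizes. The sketch below assumes the growth is measured over the eight years between the 2017 and 2025 values (the forecast itself quotes the 2018 to 2025 period), so the result should only be expected to land near the quoted rate, at roughly 45%.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1

# Market sizes quoted in the text, in millions of US dollars.
market_2017 = 445.8
market_2025 = 8887.6

# Assumption: growth is compounded over the eight years from 2017 to 2025.
rate = cagr(market_2017, market_2025, years=8)
print(f"Implied CAGR: {rate:.1%}")
```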

AI and ML find applications across the automotive value chain. They are currently being implemented in automotive manufacturing, including design, supply chain, production, and post-production. In addition, AI and ML are being implemented in ‘driver assistance’ and ‘driver risk assessment’ systems, which are transforming the transportation sector. Aftermarket services such as predictive maintenance and insurance are also being transformed through the use of AI. Applied machine learning in the automotive industry has created new intelligent products and optimized ways of working. Connected cars produce massive amounts of data, which can be used to build models that predict when maintenance needs to take place or that classify driver behavior.

How Artificial Intelligence (AI) / Machine Learning Works

How Artificial Intelligence (AI) Works

Artificial intelligence (AI) is concerned with building smart machines capable of performing tasks that typically require human intelligence. AI is an interdisciplinary science with multiple approaches, but advancements in machine learning and deep learning are creating a paradigm shift in virtually every sector. Building an AI system is a process of reverse-engineering human traits and capabilities in a machine and using its computational power to surpass what humans are capable of.

To understand how AI works, one needs to dive into its various subdomains and understand how they can be applied across the industry.

- Machine Learning: ML teaches a machine how to make inferences and decisions based on past experience. It identifies patterns and analyses past data to infer what the data points mean and reach a likely conclusion without requiring human involvement. In short, ML automates the process of reaching conclusions by evaluating data.

- Deep Learning: Deep learning is an ML technique. It teaches a machine to process inputs through layers in order to classify, infer, and predict the outcome. Deep learning algorithms include Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), Long Short-Term Memory networks (LSTMs), Stacked Auto-Encoders, Deep Boltzmann Machines (DBMs), and Deep Belief Networks (DBNs).

- Neural Networks: Neural networks work on principles similar to those of human neural cells. They are a series of algorithms that capture the relationships between underlying variables and process data in a way loosely modelled on the human brain. Frequently used neural network models and training methods include the Perceptron, Multilayer Perceptrons (MLPs), Back-Propagation, (Stochastic) Gradient Descent, and Hopfield Networks.

- Natural Language Processing (NLP): NLP is the science of a machine reading, understanding, and interpreting a language. Once a machine understands what the user intends to communicate, it responds accordingly. Key NLP algorithms include Support Vector Machines, Bayesian Networks, Maximum Entropy, and Conditional Random Fields.

- Computer Vision: Computer vision algorithms try to understand an image by breaking it down and studying different parts of the objects in it. This helps the machine classify and learn from a set of images, so that it can make better output decisions based on previous observations. Some computer vision algorithms are:

- SIFT and SURF for feature-point extraction. Used for object recognition and image registration.

- Viola-Jones algorithm, for object (especially face) detection in real time.

- 'Eigenfaces' approach, using PCA for dimension reduction. Used in face recognition.

- Lucas-Kanade algorithm for optical flow calculation. Used for tracking and stereo registration.

- Mean-shift algorithm for fast tracking of objects.

- Kalman filter, again for object tracking, using point features for tracking.

- Machine learning algorithms such as SVMs, KNN, and Naive Bayes are also important in computer vision.

- Cognitive Computing: Cognitive computing algorithms try to mimic the human brain by analysing text, speech, images, and objects the way a human does, and try to produce the desired output. Popular cognitive computing tools include SparkCognition (SparkPredict, SparkSecure, MindFabric, etc.), DeepMind, IBM Watson, Expert System, Microsoft Cognitive Services, and Cisco Cognitive Threat Analytics.
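To make one of the tracking algorithms listed above more concrete, here is a minimal sketch of a scalar Kalman filter smoothing the noisy x-coordinate of a tracked feature point. It assumes a simple random-walk motion model; the variance values and the measurements are hypothetical, and a production tracker would normally use a multi-dimensional state (position and velocity).

```python
def kalman_1d(measurements, process_var=1e-2, measurement_var=1.0):
    """Scalar Kalman filter for a slowly drifting value (random-walk model)."""
    estimate = measurements[0]    # initial state guess: the first measurement
    error_var = measurement_var   # initial uncertainty of that guess
    estimates = [estimate]
    for z in measurements[1:]:
        # Predict: the state is assumed unchanged, but uncertainty grows.
        error_var += process_var
        # Update: blend prediction and measurement using the Kalman gain.
        gain = error_var / (error_var + measurement_var)
        estimate += gain * (z - estimate)
        error_var *= (1 - gain)
        estimates.append(estimate)
    return estimates

# Hypothetical noisy x-coordinates (pixels) of a tracked feature point.
noisy_x = [100.0, 102.5, 98.7, 101.2, 99.4, 100.9, 100.3]
smoothed = kalman_1d(noisy_x)
print([round(x, 2) for x in smoothed])
```

The smoothed track stays within the range of the measurements but fluctuates less, which is what makes the filter useful for following an object across video frames.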

Arising from these approaches, artificial intelligence most frequently gets categorized into the following concepts:

- Artificial Narrow Intelligence (ANI): A branch of AI that excels at performing a single task by replicating human intelligence; it is the most basic form of AI. This type of intelligence is found in speech recognition systems and voice assistants.

- Artificial General Intelligence (AGI): AI whose purpose is general and whose capabilities can be applied to diverse tasks. This type of artificial intelligence, which remains hypothetical, would improve itself by learning and would be the closest to the human brain in terms of capacities.

- Artificial Super Intelligence (ASI): Exceeding human intelligence, this AI concept is far more sophisticated than any other artificial intelligence system or even the human brain. The main trait of ASI is that it could contemplate abstractions that humans are unable to think of.

5 Pre-Made AI Tech Tools, Frameworks and Templates

- TensorFlow - TensorFlow is an open-source software library developed by the Google Brain team. It has a flexible architecture allowing developers to “deploy computation to one or more CPUs in a desktop, server or mobile device with a single API,” although the library itself provides multiple APIs.

- Caffe - Caffe is a framework for creating deep learning systems. It was developed by the Berkeley AI Research team, and its primary focus is networks applied to computer vision. By design, the tool encourages innovation and application because its models are defined through configuration rather than hard-coding. Caffe also fosters active development, quick research experiments, and industry deployment (Caffe can process 60 million images per day).

- Neuroph - This open-source tool is used for creating artificial neural networks. It consists of a Java class library and, with its easyNeurons tool, facilitates the creation and training of neural networks. Its GUI neural network editor is very convenient, and developers can use it to create their own neural network components. The neural networks built with Neuroph have artificial neuron layers, neuron connections, a transfer function, an input function, a learning rule, and more. The tool also has its own support for image recognition.

- Apache SystemML - This framework is used to develop machine learning systems that work with Big Data. SystemML was created by IBM and is known for its flexibility and scalability. It allows multiple execution modes, customization of algorithms, and optimization based on data and cluster characteristics. Additional deep learning capabilities include GPU support and importing and running neural networks.

- Torch - Torch is an open-source machine learning library based on the LuaJIT programming language. It offers many algorithms and flexible tensors that support indexing, resizing, cloning, and sharing storage, among other features. With its interface, linear algebra routines, neural network models, efficient GPU support, and embeddable nature, Torch is used by the Facebook AI group, IBM, and Yandex, among others. PyTorch, an open-source machine learning library for Python derived from Torch, can be used for natural language processing.

How does Machine Learning work?

ML is an application of AI in which a system automatically learns and improves from experience without being explicitly programmed to do so. Learning occurs as a result of analysing ever-increasing amounts of data: the basic algorithms don’t change, but the model’s internal weights and biases used to select a particular answer do.
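A minimal sketch of this idea, assuming a one-variable linear model trained by gradient descent: the update rule stays the same on every pass, and only the weight and bias change as the data is analysed. The brake-pad data, the learning rate, and the function names are hypothetical and chosen only for illustration.

```python
def predict(weight, bias, x):
    """Model: a straight line mapping an input to a prediction."""
    return weight * x + bias

def train(xs, ys, learning_rate=0.01, epochs=5000):
    """Learner: adjust weight and bias to reduce the mean squared error."""
    weight, bias = 0.0, 0.0  # parameters start uninformed
    n = len(xs)
    for _ in range(epochs):
        errors = [predict(weight, bias, x) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to each parameter.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
    return weight, bias

# Hypothetical data: brake-pad wear (mm) against distance driven (thousand km),
# generated from wear = 0.5 * distance + 1.0.
distance = [1.0, 2.0, 3.0, 4.0, 5.0]
wear = [1.5, 2.0, 2.5, 3.0, 3.5]
weight, bias = train(distance, wear)
print(f"weight={weight:.3f}, bias={bias:.3f}")
```

After training, the learned weight and bias approach the values used to generate the data, even though the code of `train` itself never changed.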

The three major building blocks of a Machine Learning system are the model, the parameters, and the learner.

- The model is the system that makes predictions.

- The parameters are the factors which are considered by the model to make predictions.

- The learner makes the adjustments in the parameters and the model to align the predictions with the actual results.

Machine learning uses two main techniques:

- Supervised learning trains a model on data whose correct outputs are already known, for example data collected or produced by a previous ML deployment. Supervised learning is exciting because it works in much the same way humans learn. In supervised tasks, the computer is fed a collection of labelled data points called a training set. The most widely used supervised learning algorithms are support-vector machines, linear regression, logistic regression, Naive Bayes, linear discriminant analysis, decision trees, the k-nearest neighbours algorithm, and the multilayer perceptron.

- Unsupervised machine learning helps find all kinds of unknown patterns in data. In unsupervised learning, the algorithm tries to learn some inherent structure in the data from unlabelled examples only. Popular unsupervised learning algorithms include K-means clustering, hierarchical clustering, anomaly detection, Principal Component Analysis, Independent Component Analysis, and the Apriori algorithm.
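The supervised case described above can be sketched with the k-nearest neighbours algorithm, one of the algorithms listed. The training set below is hypothetical: each labelled point pairs two invented driving features with a driver-behavior label, and a new trip is classified by majority vote among its nearest labelled neighbours.

```python
from collections import Counter
import math

def knn_predict(training_set, point, k=3):
    """Classify `point` by majority vote among its k nearest labelled neighbours."""
    by_distance = sorted(training_set, key=lambda item: math.dist(item[0], point))
    nearest_labels = [label for _, label in by_distance[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical training set:
# (hard brakes per 100 km, mean km/h over the speed limit) -> label
training_set = [
    ((0.5, 1.0), "calm"),
    ((1.0, 0.0), "calm"),
    ((0.8, 2.0), "calm"),
    ((6.0, 12.0), "aggressive"),
    ((7.5, 15.0), "aggressive"),
    ((5.5, 10.0), "aggressive"),
]

print(knn_predict(training_set, (6.5, 11.0)))  # prints "aggressive"
```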

Two common unsupervised learning tasks are clustering and dimensionality reduction.

- In clustering, data points are grouped into meaningful clusters such that elements within a given cluster are similar to each other but dissimilar to those from other clusters. Clustering is useful for tasks such as market segmentation.

- Dimensionality reduction models reduce the number of variables in a dataset by grouping similar or correlated attributes for better interpretation (and more effective model training).
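As an illustration of clustering for market segmentation, the following sketch runs a basic k-means loop (Lloyd's algorithm) on a handful of hypothetical customers. The data and the starting centroids are invented; real implementations usually pick initial centroids at random and stop once assignments no longer change.

```python
import math

def k_means(points, centroids, iterations=10):
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
    return centroids, clusters

# Hypothetical customers: (annual mileage in thousand km, vehicle age in years)
customers = [(5, 1), (6, 2), (7, 1), (30, 8), (32, 9), (28, 10)]
centroids, clusters = k_means(customers, centroids=[(5, 1), (30, 8)])
print(centroids)
```

The two resulting groups (low-mileage owners of newer cars, high-mileage owners of older cars) are the kind of segments a marketing team might then treat differently.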

Python is the most widely used programming language for ML applications, largely because of its rich library ecosystem. Other programming languages that can be used for machine learning applications are R, C++, JavaScript, Java, C#, Julia, Shell, TypeScript, and Scala. Different packages suit different types of applications, as listed below:

- NumPy (scientific computing in Python), OpenCV (real-time optimized computer vision library, tools, and hardware), and scikit-learn (machine learning library for Python) are used when working with images.

- NLTK (Natural Language Toolkit), again along with NumPy and scikit-learn, is used when working with text.

- Librosa (Python package for music and audio analysis) is used for audio applications.

- Matplotlib (visualization with Python), Seaborn (Python data visualization library based on Matplotlib), and scikit-learn are used for data representation.

- TensorFlow (open-source machine learning platform) and PyTorch (open-source machine learning library, widely used for computer vision) are used for deep learning applications.

- SciPy (open-source Python library) is used for scientific and technical computing.

- Django (Python web framework) is used for integrating models into web applications.

- Pandas (Python library for data manipulation and analysis) provides high-level data structures and analysis tools.

Automotive Industry Use Cases

Design

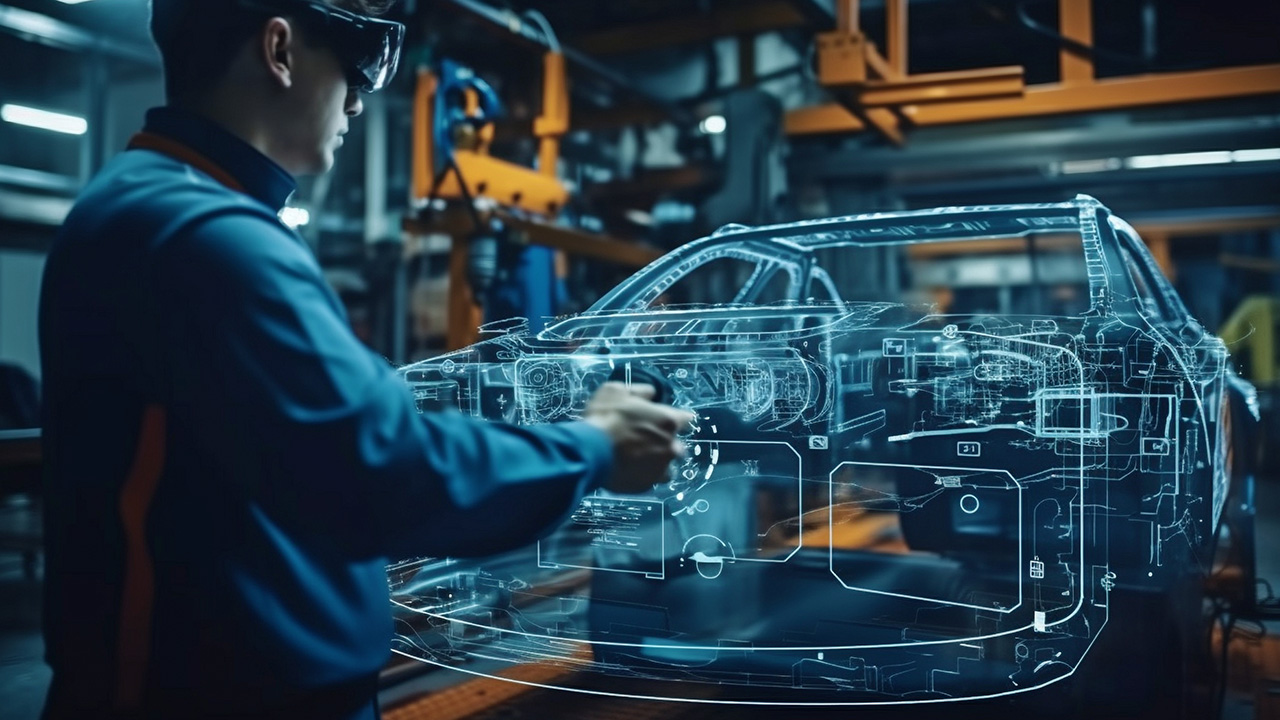

AI is now being used extensively in traditional design software such as Autodesk products. Its ability to handle high-dimensional data can be leveraged to conduct multiple design studies. Algorithms have also been used to generate architectural designs, and the designs are not restricted to the physical world: deep learning can now be used to design web pages, create brand logos, and more. In the future, AI is expected to play a major role in R&D productivity by identifying expensive R&D projects that are doomed to fail before they are fully realized, saving automotive companies both time and money. The use of artificial intelligence begins at the development stage of a new car, where several innovative technologies work together. With the help of augmented and virtual reality, it is possible to create a more thoughtful design concept and eliminate possible errors before they become costly. An intelligent system can suggest thousands of designs for future parts and models, and auto manufacturers can choose the best options.

Here are a few use cases showcasing how OEMs are using AI to speed up their design workflows:

Nvidia’s architecture uses AI, real-time ray tracing, and programmable shading to transform the traditional product design process. For example, Porsche created a trailer for its new 911 Speedster Concept car. Rather than ray-trace rendering it frame by frame on a CPU render farm, the ‘movie’ was generated in real time inside Unreal Engine using a pair of Quadro RTX GPUs. The virtual car is fully interactive: lighting can be adjusted in real time and the car viewed from any angle, with reflections on the physically-based materials updating instantly. The advanced ecosystem accelerates new design workflows and improves how teams collaborate, which in turn reduces the time taken to approve a design.

General Motors uses Autodesk’s Dreamcatcher, which applies machine learning (ML), for economical prototyping. This prototype application integrates machine learning into CAD, a process known as generative design. Dreamcatcher procedurally generates dozens of plausible designs of a given part based on a series of inputs such as required weight, strength, size, material, cost, or other performance requirements. These plausible designs can be sent directly to machining to produce test parts. Real performance data from the parts can be fed back into Dreamcatcher to improve the program’s understanding of the performance implications of its design choices. Where a designer would normally need several days to create a single design, the same work can now be done in hours, with multiple viable alternatives.

Volkswagen is also using generative design, which starts from a specific goal or problem in the car’s design, such as making it more compact without losing quality or a sense of space. The Volkswagen Microbus uses components such as brackets reshaped through generative design. AI-driven generative design has been reported to cut development time significantly.
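The generate-and-filter loop behind generative design can be sketched in a few lines. This is not how Dreamcatcher works internally; it is a toy grid search under stated assumptions: a rectangular cantilever bracket, a standard beam-bending formula for peak stress, and hypothetical load, material, and stress-limit values. Candidates that violate the strength requirement are discarded and the rest are ranked by mass.

```python
from itertools import product

DENSITY = 2700.0          # kg/m^3, approximate value for aluminium (assumption)
ALLOWABLE_STRESS = 100e6  # Pa, hypothetical design limit
LOAD = 500.0              # N, hypothetical load at the bracket tip
LENGTH = 0.10             # m, hypothetical bracket length

def evaluate(width, height):
    """Mass and peak bending stress of a rectangular cantilever bracket."""
    mass = DENSITY * width * height * LENGTH
    # Peak stress = bending moment / section modulus = 6 F L / (b h^2)
    stress = 6 * LOAD * LENGTH / (width * height ** 2)
    return mass, stress

def generate_designs(widths, heights):
    """Return feasible (mass, width, height) candidates, lightest first."""
    candidates = []
    for width, height in product(widths, heights):
        mass, stress = evaluate(width, height)
        if stress <= ALLOWABLE_STRESS:
            candidates.append((mass, width, height))
    return sorted(candidates)

widths = [0.010, 0.015, 0.020]   # m
heights = [0.010, 0.015, 0.020]  # m
designs = generate_designs(widths, heights)
print(designs[0])  # lightest design that still meets the stress limit
```

A real generative design tool explores far richer geometry and uses measured performance data to refine its search, but the structure is the same: propose many candidates, check them against the requirements, and present the best to the designer.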

Manufacturing

AI-based manufacturing systems enable automakers to create and manage schedules more effectively, improve safety testing, and identify defects in components before they go into vehicles. These systems enable robots to pick parts from the conveyor belt with a high rate of success. Using deep learning, the robot automatically determines which parts to pick, how to pick them, and in what sequence. This can significantly reduce the workforce required and, in turn, boost the accuracy of the process. AI also supports overall production by letting robots sense the movements of human employees and adjust their own motions to avoid injuring them. Automated Guided Vehicles can move materials around plants by themselves, identifying objects in their path and adjusting their route. There are also painting robots, which follow a pre-programmed routine and can alert quality control personnel to any defects they identify.

Hyundai is developing wearable robots (H-CEX, H-VEX, H-MEX) that help with tedious tasks on the shop floor:

- Hyundai Chairless Exoskeleton (H-CEX) is a knee-joint protective device that helps maintain a worker’s sitting position. Weighing 1.6kg, it is light yet highly durable and can withstand weights of up to 150kg. With waist, thigh, and knee belts, it can be easily fitted and adjusted to the user’s height.

- Hyundai Vest Exoskeleton (H-VEX) is a wearable robot that eases pressure on workers’ neck and back by adding 60kg of strength to the user when their arms are used overhead. It is expected to be very effective at preventing injury and increasing work efficiency.

- Hyundai Medical Exoskeleton (H-MEX) assists paraplegics and elderly people with walking and traversing staircases.

AI-based algorithms can digest masses of data from vibration sensors and other sources, detect anomalies, separate errors from background noise, diagnose the problem, and predict whether a breakdown is likely or imminent. Companies like Konux feed the sensor data into an AI- and IoT-based predictive maintenance system that crunches it to improve system performance, optimize maintenance planning, and extend asset life cycles. Visions of the future auto factory, such as Audi’s 2035 smart factory concept, foresee only flexible production stations supervised by unmanned systems.
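The anomaly-detection step can be illustrated with a deliberately simple statistical rule: learn the mean and spread of vibration readings from a known-healthy period, then flag later readings that deviate by more than a chosen number of standard deviations. The readings and the threshold below are hypothetical, and commercial systems use considerably richer models than this sketch.

```python
from statistics import mean, stdev

def find_anomalies(readings, baseline_size=20, threshold=3.0):
    """Flag readings far from a healthy baseline.

    Returns (index, value) pairs for readings more than `threshold`
    standard deviations away from the baseline mean.
    """
    baseline = readings[:baseline_size]
    mu, sigma = mean(baseline), stdev(baseline)
    return [
        (index, value)
        for index, value in enumerate(readings[baseline_size:], start=baseline_size)
        if abs(value - mu) > threshold * sigma
    ]

# Hypothetical vibration amplitudes (mm/s): 20 healthy samples, then live data.
healthy = [2.0, 2.1, 1.9, 2.0, 2.2, 1.8, 2.0, 2.1, 1.9, 2.0] * 2
live = [2.1, 1.9, 2.0, 3.5, 2.0, 4.1]
alerts = find_anomalies(healthy + live)
print(alerts)  # prints [(23, 3.5), (25, 4.1)]
```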

Supply Chain Optimization

Automotive supply chains are among the most complex networks because a vehicle comprises roughly 30,000 distinct parts that arrive from different suppliers across the globe. Supply chain data analytics with AI brings in new and innovative data sources that help support prudent shipping decisions and minimize risk. With machine learning-driven systems, it is also possible to analyze huge data sets to rank suppliers according to their on-time in-full delivery performance, credit scoring, and evaluations, which gives manufacturers greater control over their supply chains, including logistics and management.
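A supplier ranking of this kind can be sketched as a weighted score over on-time in-full (OTIF) rate and credit score. The suppliers, the 0 to 100 credit scale, and the fixed weights below are hypothetical; in a machine learning-driven system the weighting would typically be learned from historical outcomes rather than set by hand.

```python
from dataclasses import dataclass

@dataclass
class Supplier:
    name: str
    orders: int
    on_time_in_full: int  # orders delivered both on time and complete
    credit_score: float   # hypothetical rating on a 0-100 scale

    @property
    def otif_rate(self) -> float:
        return self.on_time_in_full / self.orders

def rank_suppliers(suppliers, otif_weight=0.7, credit_weight=0.3):
    """Sort suppliers by a weighted blend of OTIF rate and credit score."""
    def score(s):
        return otif_weight * s.otif_rate + credit_weight * s.credit_score / 100
    return sorted(suppliers, key=score, reverse=True)

suppliers = [
    Supplier("Supplier A", orders=200, on_time_in_full=188, credit_score=72),
    Supplier("Supplier B", orders=150, on_time_in_full=120, credit_score=90),
    Supplier("Supplier C", orders=300, on_time_in_full=291, credit_score=70),
]
ranking = [s.name for s in rank_suppliers(suppliers)]
print(ranking)  # prints ['Supplier C', 'Supplier A', 'Supplier B']
```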

Blue Yonder uses AI techniques to optimize its forecasting and replenishment while simultaneously adjusting pricing.

- Scenario planning with AI-enabled what-if scenarios. Resilience means the ability to quickly reconfigure global and regional supply chain flows, flexibly source components from various suppliers, and constantly make trade-offs based on cost, service, and risk analysis. AI solutions help manufacturers develop ‘what-if’ simulations and contingency plans based on demand realization and supply disruption scenarios. Powered by AI, scenario modeling enables organizations to understand the impact of decisions on service levels, cash flow, profit and loss, and the balance sheet.

- End-to-end supply chain visibility with AI-enabled Control Towers. No matter how complex the supply chain, the Control Tower scans the entire ecosystem to provide AI-powered visibility and real-time responsiveness. In the event of a missed part delivery, the control tower weighs options such as expediting a replacement from the original supplier or shipping from an alternate source. It evaluates the speed of different carriers and routes, and autonomously enables the optimal outcome based on cost, customer service levels, and revenue and margin.

- Aftermarket omni-channel fulfillment with AI Automation. Service-level requirements vary greatly among channels, including dealers, distributors, e-commerce, and fleet. This means orchestrating personalized, experience-aware supply chains across a complex structure of products, assemblies, parts families, and SKUs. AI and automation capabilities dynamically optimize service and financial outcomes across all segments.

Here are a few results reported by automotive companies:

- Mahindra & Mahindra relies on an AI-enabled dynamic segmentation capability to optimize its spare parts inventories. Outcomes include a 10% increase in service levels, a 40% reduction in response times, and a 10% increase in forecast accuracy, along with a 10% to 15% reduction in inventory days.

- CEAT Tyres has digitally transformed its supply chain with an AI solution to achieve accurate inventory visibility, data transparency, and inventory optimization and performance, ensuring that the right products get to the right places at the right times.

- Escorts Group implemented AI solutions to unlock working capital from inventories in its agricultural machinery business. With an agile supply chain that can predict disruptions and proactively adapt its response, Escorts now has end-to-end visibility into its planning and can pivot to meet any challenges.

Quality Control

Quality control, such as inspecting painted car bodies, is slow, tedious, and prone to errors when done manually. AI-based machines can often detect defects more accurately than humans. Audi uses machine learning to recognize and mark the minutest cracks in sheet metal parts, and this approach is expected to replace the current optical crack detection method.

In the auto industry, the quality of every part is critical, as it can make the difference between life and death in a critical situation. Using image recognition and anomaly detection technologies, sensor-based artificial intelligence assesses the quality of every part on the production line, and defective objects are immediately removed. Moreover, Audi’s artificial intelligence recognizes not only defects but also the smallest scratches on a car. Parts manufacturers can capture images of each component as it comes off the assembly line and automatically run those images through a machine learning model to identify any flaws. Highly accurate anomaly detection algorithms can detect issues down to a fraction of a millimeter. Predictive analytics can be used to evaluate whether a flawed part can be reworked or needs to be scrapped.

To implement an image recognition and analytics model, the manufacturer needs an accurate dataset containing hundreds or even thousands of parts images, each one tagged with information such as pass, fail, issue A/B/C, etc. The data scientist constructing the model must also have domain expertise regarding allowable tolerances and the potential performance and safety impact of various flaws.
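One way to picture such a tagged dataset, and to check a trained model against it, is sketched below. The record fields and file names are hypothetical, and scoring the model by precision and recall on the "fail" class is an assumption made here, chosen because a missed defect (low recall) is usually the costlier error for safety-critical parts.

```python
from dataclasses import dataclass

@dataclass
class InspectionRecord:
    image_path: str  # hypothetical file name of the part image
    label: str       # ground-truth tag: "pass" or "fail"
    predicted: str   # model output for the same image

def defect_metrics(records):
    """Precision and recall for the "fail" class."""
    tp = sum(r.label == "fail" and r.predicted == "fail" for r in records)
    fp = sum(r.label == "pass" and r.predicted == "fail" for r in records)
    fn = sum(r.label == "fail" and r.predicted == "pass" for r in records)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

records = [
    InspectionRecord("part_001.png", "pass", "pass"),
    InspectionRecord("part_002.png", "fail", "fail"),
    InspectionRecord("part_003.png", "fail", "pass"),  # missed defect
    InspectionRecord("part_004.png", "pass", "fail"),  # false alarm
    InspectionRecord("part_005.png", "fail", "fail"),
]
precision, recall = defect_metrics(records)
print(f"precision={precision:.2f}, recall={recall:.2f}")
```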

Car Dealership Experience and Monetization

AI is helping dealers become more efficient and transparent and deliver an enhanced customer experience. The 18-store Keating Auto Group invested in an ML-based solution that delivers a daily list of active shoppers, including previous customers who were shopping again. This allowed the group to pair online behavior with an actual shopper; salespeople used this information to proactively engage shoppers and lead them along a tailored sales journey. The investment paid off: collectively, the group sells more than 25,000 vehicles and generates more than $1 billion in revenue every year.

Toyota also grew sales by using AI/ML to engage shoppers according to their preferences, serving up only those makes, models, trims, and offers that match each shopper’s wish list. They created personalized customer journeys through digital engagement, and the dealership was able to increase sales by 150 per cent. Using AI technology, car dealers are trying to evolve from mere transaction hubs to experience hubs.

Automotive Insurance Optimization

The automotive insurance industry is also on the verge of a tech-driven shift. The applications of AI in insurance are speeding up the process of filing claims when accidents occur.

Ant Financial created Ding Sun Bao, software that analyzes vehicle damage and handles claims using machine vision. Ant Financial says a user can photograph the vehicle damage with a smartphone camera or upload existing pictures of the damage to the app. Ding Sun Bao then compares the uploaded picture with a database of images labelled with different severities of damage; these images may also be tagged with probable repair costs. The software then creates a report for the user on the damaged components, a repair plan, and the accident’s effect on the user’s premiums in the following years.

Nexar offers smartphone and dashcam-enabled computer vision and crash detection for insurers to offer their customers. They claim the software can analyze the speed and force of collisions and use this information to create detailed incident reports.

Ping An Property & Casualty Insurance Company launched a nationwide credit-based smart auto insurance claims solution. It enables drivers to make claims through their smartphone by uploading photos for assessment by AI and to receive compensation deducted from a dynamic credit quota, which is tied to their driving behavior and history, including traffic violations and credit records. Car owners can check their credit quota via a mobile application developed by Ping An, and the quota changes dynamically based on big data such as the user's driving habits.

Driver Behavior, Performance and Experience

Advanced Driver Assistance Systems (ADAS) not only help with car parking, automatic door locking, and hands-free phone calls but also gather insights about the vehicle, the driver, driving habits, and the passengers. Based on these insights, ADAS make informed decisions.

- Ford, Honda, Mazda, and Mercedes-Benz are developing Driver Attention Alert systems, which learn driving behaviour from steering input and direction at the start of the ride and compare it with data from later stages of the ride. Driver Attention Alert is designed to reduce accidents caused by inattentiveness due to driver fatigue. The AI-based system comes into play at speeds above 65 km/h and begins to “learn” the driver’s habits, watching inputs and the vehicle’s movements in the early stages before fatigue is a factor. Later, if the system detects changes in vehicle behavior that suggest the driver may be losing concentration, it suggests a rest stop by sounding a chime and displaying a warning in the Multi-Information Display.

- Nauto creates AI sensor technology for commercial fleets. The technology assesses driver behavior to reduce distracted driving, which in turn reduces the incidents that lead to collisions. Nauto’s Driver Behavior Learning Platform deploys AI in the vehicle to continuously analyze sensor data on driver behavior, exterior hazards, and vehicle movement in real time. The platform consists of five core modules designed to deepen Nauto’s ability to analyze driver behavior, vehicle triggers, and exterior hazards: In-Vehicle Alerts, On-Demand Coaching, Claims Management, Safety Insights, and Driver Engagement.

- Waymo’s 360-degree perception technology detects pedestrians, vehicles, cyclists, roadblocks, and other obstacles from about 300 yards. Waymo claims users can enjoy a safer ride thanks to the AV’s vision system (computer vision, audio recognition, and machine learning), which is capable of object and event detection and response. The vision system’s cameras constantly scan the road for moving and static objects through a 360-degree view of the surroundings. The cameras capture images of these objects, which the machine learning algorithms compare against a database to determine what the system is seeing. To train the system to recognize an object, it is fed millions of images of that object. So if a child crosses the street, the collision detection and avoidance system recognizes the body crossing the street as a child and determines that the car has to slow down or stop to let the child cross safely before it can proceed. Waymo also claims that its AVs feature audio recognition technology capable of hearing and recognizing the sirens of fire trucks, ambulances, police cars, and police motorcycles up to hundreds of feet away, so that the car can make way for these vehicles. In Waymo’s case, the sensors collected samples of emergency vehicle sirens at various speeds, distances, and directions to enable the self-driving cars to respond safely to emergency vehicles on the road.
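The learn-then-compare logic described for Driver Attention Alert can be sketched as follows. Only the 65 km/h activation speed comes from the description above; the baseline length, window size, sensitivity factor, and the use of steering-angle variability as the fatigue signal are hypothetical simplifications, not any manufacturer's actual method.

```python
from statistics import pstdev

SPEED_THRESHOLD_KMH = 65  # activation speed quoted in the text
BASELINE_SAMPLES = 5      # hypothetical length of the learning phase
WINDOW = 5                # hypothetical size of the monitoring window
DRIFT_FACTOR = 2.0        # hypothetical sensitivity

def attention_alerts(samples):
    """Return indices of samples at which a rest stop would be suggested.

    `samples` is a list of (speed_kmh, steering_angle_deg) readings.
    """
    # Only readings above the activation speed are considered.
    active = [(i, angle) for i, (speed, angle) in enumerate(samples)
              if speed > SPEED_THRESHOLD_KMH]
    if len(active) < BASELINE_SAMPLES + WINDOW:
        return []
    # Learning phase: steering variability early in the trip.
    baseline = pstdev([angle for _, angle in active[:BASELINE_SAMPLES]])
    alerts = []
    for end in range(BASELINE_SAMPLES + WINDOW, len(active) + 1):
        recent = [angle for _, angle in active[end - WINDOW:end]]
        # Alert when recent steering is much more erratic than the baseline.
        if pstdev(recent) > DRIFT_FACTOR * baseline:
            alerts.append(active[end - 1][0])
    return alerts

parking = [(20, 15.0), (30, -12.0)]  # ignored: below the activation speed
alert_driver = [(90, a) for a in (0.5, -0.4, 0.3, -0.5, 0.4, 0.4, -0.3, 0.5, -0.4, 0.3)]
tired_driver = [(90, a) for a in (3.0, -2.5, 0.2, 4.0, -3.5)]
alerts = attention_alerts(parking + alert_driver + tired_driver)
print(alerts)  # prints [12, 13, 14, 15, 16]
```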

Passenger Experience and Infotainment

Consumers want everything on the go, including a response from their cars. Taking this psychology of modern consumers into account, car manufacturers are developing strategies and differentiated applications to upgrade the user experience.

Ford introduced FordPass, a subscription-based dongle that plugs straight into the vehicle’s onboard diagnostics port. Ford has also announced FordPass as a mobility and connectivity solution for its customers in India. The company said that all BS6 Ford vehicles will come with a factory-fitted, cloud-connected device that communicates with vehicle owners in real time via the dedicated FordPass smartphone application. The device works on a 4G data connection that Ford will pay for, with unlimited use for three years from the date of vehicle purchase. FordPass members can talk to personal mobility assistants, called FordGuides, 24x7 to help resolve their mobility challenges, whether finding a more efficient way to get around or booking parking before reaching their destination.

Hyundai is developing a new in-car infotainment system that includes a personalized audio search experience and playlists, accessible to customers via voice commands. Audioburst, which uses AI technology to extract the best bits from podcasts and talk radio to create new listening experiences, has raised strategic funding from Dentsu and Hyundai Motor. Audioburst ingests and indexes millions of audio segments per day, then uses AI technology, including Automatic Speech Recognition and Natural Language Understanding, to create products such as a searchable library of audio, personalized audio feeds and news briefs, notifications, and more. Through an API, partners can integrate Audioburst’s personalized feeds into their own smart speakers, mobile apps, in-car infotainment systems, and other products.

Conclusion

OEMs believe the effort and expense involved in adopting AI and ML are worth it. Even though challenges remain, such as the complexity of software development and various compliance and regulatory requirements, different fields within the automotive industry are already leveraging AI and ML and seeing increased efficiency and optimized processes. To meet the market’s need for an all-encompassing in-vehicle user experience with enhanced safety, automakers are willing to abandon traditional ways and focus on software and data. Investing in autonomous manufacturing units and technology personnel now will help manufacturers become familiar with the advanced AI and ML technologies that will be used in autonomous vehicles in the near future. Players that recognize this trend and act now stand to reap substantial benefits.